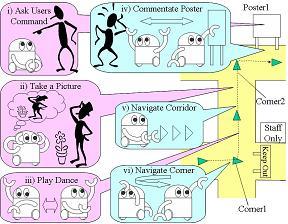

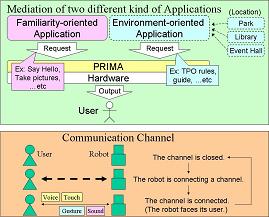

This research presents a middleware architecture for personal robots used in various environments. The architecture allows a user's own robot to consistently integrate environment-oriented applications with its own application, which carries the robot's original and familiar personality. These applications must share the robot's sensors and actuators and must generate consistent actions in sequence. However, integrating two different kinds of independently developed applications breaks the continuity of human-robot communication and slows the robot's response. To solve these problems, we have designed a new middleware for personal robots, called PRIMA (Personal Robots' Intermediating Mediator Adapting to environment), which schedules the outputs of these applications so as to maintain the human-robot communication channel. We call this integration the personalization of dynamically loaded service programs, and we conducted an experiment demonstrating the effect of PRIMA. [Kobayashi04, Kobayashi03b, Kobayashi03a]
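PRIMA's core job, as described above, is to schedule the outputs of the personality application and the environment-oriented service applications onto shared actuators. The sketch below is a minimal illustration that assumes a simple priority queue with first-in-first-out tie-breaking; `OutputScheduler`, `submit`, the `priority` convention (lower is more urgent), and the source names are all hypothetical and are not PRIMA's actual interface.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class OutputRequest:
    priority: int                        # hypothetical convention: lower value = more urgent
    seq: int                             # arrival order, so equal priorities stay FIFO
    source: str = field(compare=False)   # e.g. "personality" or "service"
    action: str = field(compare=False)   # e.g. "speak: hello"


class OutputScheduler:
    """Hypothetical arbiter that serializes outputs from several applications
    onto the robot's shared actuators, one action at a time."""

    def __init__(self):
        self._queue = []
        self._seq = 0

    def submit(self, source, action, priority):
        heapq.heappush(self._queue, OutputRequest(priority, self._seq, source, action))
        self._seq += 1

    def run(self):
        """Drain the queue in priority order and return the emitted (source, action) pairs."""
        emitted = []
        while self._queue:
            req = heapq.heappop(self._queue)
            emitted.append((req.source, req.action))
        return emitted


sched = OutputScheduler()
sched.submit("service", "announce: meeting room is free", priority=2)
sched.submit("personality", "nod", priority=1)
sched.submit("service", "point: meeting room", priority=2)
result = sched.run()
```

Giving the personality application's reactions a higher priority is one way to keep the communication channel responsive while a service program is active; the arbitration policy used in PRIMA itself may differ.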

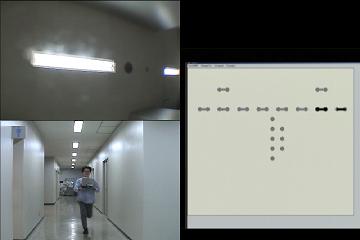

This research proposes a real-time self-localization method for indoor environments using a monocular camera pointed at the ceiling. The method is robust against variations in the camera's position, orientation, and velocity, and against occlusions. The IDs of the light sources are identified by geometric calculation, using features extracted from the ceiling image and the map, and are tracked from frame to frame. A detailed self-position is calculated from correspondences of feature points between the ceiling image and the map only when it is requested. The system can also cope with the user entering the camera's field of view, by setting aside a special area of the camera frame in which the ceiling image may be obstructed. To confirm the feasibility of the proposed method, experimental results in an office environment are presented. (DEMO1 DEMO2 DEMO3)
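The on-demand detailed self-position step amounts to fitting a rigid transform between matched points. The sketch below assumes the ceiling feature points have already been converted from pixels into metric coordinates on the ceiling plane (for example, using a calibrated camera and a known ceiling height) and matched to map points; it then solves the standard least-squares 2-D rigid alignment in closed form. The function name `estimate_pose_2d` is hypothetical, and this is not necessarily the estimator used in the original work.

```python
import math


def estimate_pose_2d(cam_pts, map_pts):
    """Least-squares 2-D rigid fit with map ~= R(theta) * cam + t.

    cam_pts: feature points in the camera's ceiling-plane frame (assumed metric).
    map_pts: the matched points in map coordinates.
    Returns (x, y, theta): the camera's position and heading in the map.
    """
    n = len(cam_pts)
    if n < 2 or n != len(map_pts):
        raise ValueError("need at least two matched point pairs")
    cx = sum(p[0] for p in cam_pts) / n
    cy = sum(p[1] for p in cam_pts) / n
    mx = sum(p[0] for p in map_pts) / n
    my = sum(p[1] for p in map_pts) / n
    # Dot- and cross-product sums of the centred point sets give the optimal angle.
    s_dot = 0.0
    s_cross = 0.0
    for (ax, ay), (bx, by) in zip(cam_pts, map_pts):
        ax, ay, bx, by = ax - cx, ay - cy, bx - mx, by - my
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation carries the rotated camera-frame centroid onto the map centroid;
    # it is also where the camera-frame origin lands in the map.
    tx = mx - (c * cx - s * cy)
    ty = my - (s * cx + c * cy)
    return tx, ty, theta


pose = estimate_pose_2d([(1, 0), (0, 1), (1, 1)], [(2, 2), (1, 1), (1, 2)])
```

In this usage example the three map points were generated from a camera at (2, 1) rotated by 90 degrees, and the fit recovers approximately (2, 1, pi/2). With noisy correspondences the same formula gives the least-squares estimate.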

This paper presents a new robot navigation system that operates on a sketched floor map provided by a user. The sketch map resembles the floor plans displayed at building entrances, which contain neither accurate metric information nor details such as obstacles. The system lets a user give navigational instructions to a robot by interactively providing a floor map and pointing out goal positions on it. Since metric information is unavailable, navigation uses an augmented topological map that describes the structure of the corridors extracted from the given floor map. Multiple hypotheses of the robot's location are maintained and updated during navigation to cope with sensor aliasing and with landmark-matching failures caused by factors such as unknown obstacles inside the corridors. [Setalaphruk03, Vachirasuk02]
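Maintaining multiple location hypotheses on a topological map can be illustrated with a discrete Bayes-style filter over corridor nodes. This is a swapped-in, simplified technique, not the system's published algorithm: the motion model just spreads each hypothesis evenly to adjacent nodes, the observation is a single landmark label, and `update_hypotheses`, `p_match`, and the label strings are hypothetical. A mismatched observation only down-weights a hypothesis instead of deleting it, which mirrors the goal of tolerating landmark-matching failures.

```python
def update_hypotheses(hyps, graph, labels, observed, p_match=0.8):
    """One predict/correct step over a topological corridor graph.

    hyps:   {node: weight} current location hypotheses
    graph:  {node: [neighbouring nodes]}
    labels: {node: landmark type}, e.g. "T", "corner", "dead_end" (hypothetical)
    observed: landmark type detected at the robot's new position
    """
    if not 0.0 < p_match < 1.0:
        raise ValueError("p_match must lie strictly between 0 and 1")
    # Predict: the robot moved one node along a corridor, so spread each weight
    # evenly over the node's neighbours (an isolated node keeps its weight).
    moved = {}
    for node, w in hyps.items():
        nbrs = graph[node] or [node]
        for nb in nbrs:
            moved[nb] = moved.get(nb, 0.0) + w / len(nbrs)
    # Correct: weight by how well the node's landmark matches the observation.
    # A mismatch is down-weighted, not eliminated, so an obstacle hiding a
    # junction does not wipe out the true hypothesis.
    updated = {
        n: w * (p_match if labels[n] == observed else 1.0 - p_match)
        for n, w in moved.items()
    }
    total = sum(updated.values())
    return {n: w / total for n, w in updated.items()}


graph = {"A": ["B"], "B": ["A", "C"], "C": ["B", "D"], "D": ["C"]}
labels = {"A": "dead_end", "B": "T", "C": "corner", "D": "dead_end"}
result = update_hypotheses({n: 0.25 for n in "ABCD"}, graph, labels, "T")
```

Starting from a uniform belief over a four-node corridor, observing a T-junction concentrates most of the weight on node B while every other node keeps a small, nonzero weight.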

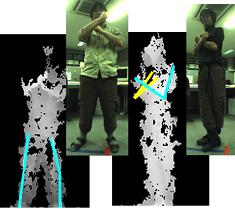

This research proposes a method for estimating human postures under self-occlusion using a 3-D camera image and a color image. The method recognizes standing postures in which the arms occlude the body or each other, and in which the two legs occlude each other. Here, human posture estimation is defined as estimating the 3-D positions and correspondences of the major joints, i.e., wrists, elbows, shoulders, hip joints, knees, and ankles. The method solves the self-occlusion problem by first determining joint candidate regions and then determining joint correspondences from the continuity of distance. The proposed method is validated by recognizing 18 postures performed by 5 subjects.
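One possible reading of "continuity of distance" is that two joint candidates lie on the same limb only if the depth values sampled between them change smoothly, whereas a jump in depth marks an occlusion boundary. The sketch below assumes that reading, a depth image stored as a 2-D list, and a hypothetical threshold `max_step`; `sample_line`, `depth_continuous`, and `match_joint` are illustrative names, not the paper's procedure.

```python
def sample_line(p, q):
    """Integer pixel coordinates (row, col) along the segment from p to q."""
    (r0, c0), (r1, c1) = p, q
    n = max(abs(r1 - r0), abs(c1 - c0))
    if n == 0:
        return [p]
    return [(round(r0 + (r1 - r0) * i / n), round(c0 + (c1 - c0) * i / n))
            for i in range(n + 1)]


def depth_continuous(depth, p, q, max_step):
    """True if no two consecutive depth samples between p and q differ by more than max_step."""
    vals = [depth[r][c] for r, c in sample_line(p, q)]
    return all(abs(b - a) <= max_step for a, b in zip(vals, vals[1:]))


def match_joint(depth, parent, candidates, max_step):
    """Return the nearest candidate reachable from the parent joint without a
    depth jump (assumed to mean 'same limb'), or None if there is none."""
    ok = [c for c in candidates if depth_continuous(depth, parent, c, max_step)]
    if not ok:
        return None
    return min(ok, key=lambda c: (c[0] - parent[0]) ** 2 + (c[1] - parent[1]) ** 2)


# Toy depth image: an arm at depth 1.0 (row 2) in front of the body at depth 2.0.
depth = [[2.0] * 5 for _ in range(5)]
depth[2] = [1.0] * 5
wrist = match_joint(depth, (2, 0), [(0, 0), (2, 4)], max_step=0.2)
```

In the toy image the candidate at (0, 0) is closer to the elbow at (2, 0), but the path to it crosses from the arm onto the body, so the farther candidate at (2, 4) on the same depth surface is chosen.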

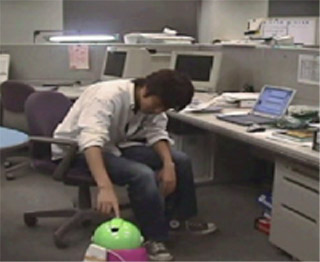

This research presents a new conceptual model in which a robot decides when to initiate communication with people by monitoring their behavior in their environments. We collected a set of image data on a person's working behavior around his desk in our laboratory room. By analyzing these data, we identified several functions for judging whether or not the person can be interrupted by a robot, and we then implemented these decision functions using image analysis techniques. Experimental results show that these functions perform well enough to initiate communication between human and robot.

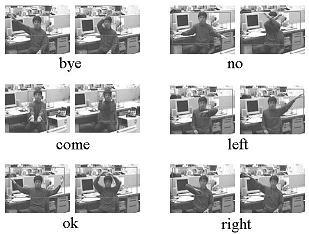

Communication by gestures is promising for natural human-machine interfaces. We propose a new, simple method for recognizing repetitive gestures in office environments. The method recognizes gestures regardless of their position in the view and their distance from the camera. Regions of interest are segmented by observing changes in the differences between image frames, and each gesture is recognized by comparing the correlation coefficient matrix between nine segments of a region of interest with the standard matrices corresponding to each gesture. Experimental results show that six kinds of gestures performed at four different distances were recognized with more than 80% accuracy.
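The recognition step can be sketched as follows. The sketch assumes that each region of interest is split into a 3×3 grid, that each segment's motion is measured as the summed absolute frame difference, and that gestures are matched to templates by the squared (Frobenius) distance between correlation matrices. The comparison metric, the handling of motionless segments, and all function names are assumptions; the original text does not specify them.

```python
import math


def segment_motion(frames, grid=3):
    """For each consecutive frame pair, sum |difference| inside each cell of a
    grid x grid split of the region of interest. Returns grid*grid time series."""
    h, w = len(frames[0]), len(frames[0][0])
    series = [[] for _ in range(grid * grid)]
    for prev, cur in zip(frames, frames[1:]):
        for k in range(grid * grid):
            r0, r1 = (k // grid) * h // grid, (k // grid + 1) * h // grid
            c0, c1 = (k % grid) * w // grid, (k % grid + 1) * w // grid
            series[k].append(sum(abs(cur[r][c] - prev[r][c])
                                 for r in range(r0, r1) for c in range(c0, c1)))
    return series


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # assumption: a segment with constant motion counts as uncorrelated
    return sxy / math.sqrt(sxx * syy)


def correlation_matrix(series):
    """9 x 9 matrix of pairwise correlations between the segments' motion series."""
    return [[pearson(a, b) for b in series] for a in series]


def classify(matrix, templates):
    """Name of the template matrix closest to `matrix` in squared Frobenius distance."""
    def dist(m1, m2):
        return sum((a - b) ** 2 for r1, r2 in zip(m1, m2) for a, b in zip(r1, r2))
    return min(templates, key=lambda name: dist(matrix, templates[name]))
```

Because only correlations between segments are compared, not absolute motion magnitudes or pixel positions, a representation like this does not depend directly on where the gesture appears or on its scale, which is consistent with the position- and distance-independence claimed above. In practice each template would be built from recorded examples of a gesture; that step is omitted here.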